I’ve been working on Smolblog, a social web blogging app. To help me get to a minimally viable product sooner, I’ve been building it on top of WordPress. However, WordPress is built exclusively for the MySQL database, and I eventually want Smolblog to work with many different databases, especially SQLite. This means, for my own code, I need to abstract the database away.

The first pass I had at this was to simply have Query objects and services to handle those. This would effectively abstract away the entire data layer, making it completely system-agnostic. It wouldn’t even need to be a traditional database. But as I built this system out, I was making more and more assumptions about what the database and data code would look like. And while the database code was all abstracted away, I still had to write it. A lot of it. And every line I wrote using $wpdb was another line I’d have to rewrite someday.

I’ve been looking at other frameworks to use, and Laravel is by far the strongest contender. Their approach to dependency injection and services seems to line up well with how I’ve organically built Smolblog to this point. So when I found out that their database abstraction layer also included a way to use the library without taking on the entire Laravel framework, I decided to make “someday” today.

Prerequisites

- Composer: While you can use this library without using Composer, it’s very much not recommended. That being said, if you’re using this in a plugin for general use or otherwise don’t have control over your entire WordPress environment, be sure to use Mozart or some other tool to isolate the namespaces of your dependencies.

- Populated database constants: Some of the more modern WordPress setups use a connection string or other way to connect to MySQL. I didn’t find a way to get that information out of the

$wpdb constant, so this code relies on having DB_HOST and other constants from wp-config.php defined.

- PDO::MySQL: Illuminate DB uses PDO to handle databases, so you’ll need to make sure your PHP server has the PDO::MySQL extension installed. I’m using the official PHP image, so I needed to add these two lines to my

Dockerfile:

RUN docker-php-ext-install pdo_mysql

RUN docker-php-ext-enable pdo_mysql

Step 1: Dependency Injection

We’re going to use dependency injection to separate creating the database connection from using the database connection. This way the database connection can change without as much code changing.

The documentation for Laravel’s query builder involves calling their DB facade, a global class that calls a singleton instance. Digging through the documentation and code, it looks like the underlying class conforms to the Illuminate\Database\ConnectionInterface interface. So that’s what we’ll use in our service’s constructor:

use Illuminate\Database\ConnectionInterface;

class ContentEventStream implements Listener {

public function __construct(

private ConnectionInterface $db,

) {

}

}

Inside the service, we’ll follow the documentation, replacing any use of the DB facade with our $db object:

$this->db->table('content_events')->insert(['column' => 'value']);

Step 2: Connection Factory

Now that we know what we need, we need to create it.

The README for the Illuminate Database package has good starting instructions. We’ll combine those with data from wp-config.php and $wpdb:

use Illuminate\Database\Capsule\Manager;

use Illuminate\Database\ConnectionInterface;

function getLaravelConnection(): ConnectionInterface {

global $wpdb;

$capsule = new Manager();

$capsule->addConnection( [

'driver' => 'mysql',

'host' => DB_HOST,

'database' => DB_NAME,

'username' => DB_USER,

'password' => DB_PASSWORD,

'charset' => DB_CHARSET,

'prefix' => $wpdb->prefix,

] );

return $capsule->getConnection();

}

(As mentioned, we’re pulling the connection information straight from configuration. If you know how to get it from $wpdb, let me know!)

The prefix property on the connection works much the same way as WordPress' table prefix. Since we’re using the connection object to also build our queries, it will add the prefix to our queries automatically. Using this property will also use the correct tables for blogs in multisite, so data from one blog doesn’t leak into another.

For Smolblog, I only want one set of tables regardless of multisite. I also want to prefix the Smolblog-specific tables, mostly so they’re all in one place when I’m scrolling. So my prefix property looks like this:

$capsule->addConnection( [

// ...

'prefix' => $wpdb->base_prefix . 'sb_',

] );

Because I don’t want a global object or the Eloquent ORM, I can ignore the rest of the setup from the project README.

Finally, we’ll want to store this created object somewhere central. Smolblog uses a simple dependency injection container, so we’ll store it there. The first time a service that needs a database connection is created, the container will run this function and provide the object.

(Honestly, the container probably deserves a blog post of its own; you can look at the source code in the meantime.)

Step 3: Update the Schema

We have our code to build queries. We have our connection to the database. The only thing we need now is the actual tables for the database.

Here is where we can use WordPress to its full extent. We will be using the dbDelta function in particular. This will tie into WordPress' existing system for updating the database structure alongside WordPress itself.

Some plugins tie this migration code to an activation hook, but we want to be able to modify the tables even after the plugin is activated. So our process will look like this:

- Loop through the different tables we will need.

- Check the blog options for a schema version.

- If the version matches what we have in code, we’re up-to-date. Skip to the next table.

- Pass the new table schema to

dbDelta and let it do its thing.

- Save the schema version to blog options.

- Rinse and repeat for each table.

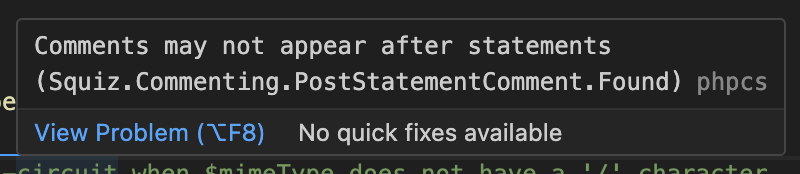

At this point, I should bring up some of the caveats with the dbDelta function. The comments on the WordPress documentation are invaluable here, especially as they point out a few things that need to be consistent with our schemas.

Because there’s so many things that need to be consistent, we’ll isolate the unique parts of our table schemas to two things:

- A name. Because every table needs one. We will declare it without the prefix.

- The fields excluding the primary key. We can have

UNIQUE indexes on other fields for a similar effect, but every table will have an auto-incrementing id field.

A series of values keyed to short strings? That sounds like an array! Here’s part of what Smolblog’s schema array looks like:

class DatabaseHelper {

public const SCHEMA = [

'content_events' => <<<EOF

event_uuid varchar(40) NOT NULL UNIQUE,

event_time varchar(30) NOT NULL,

content_uuid varchar(40) NOT NULL,

site_uuid varchar(40) NOT NULL,

user_uuid varchar(40) NOT NULL,

event_type varchar(255) NOT NULL,

payload text,

EOF,

'notes' => <<<EOF

content_uuid varchar(40) NOT NULL UNIQUE,

markdown text NOT NULL,

html text,

EOF,

];

public static function update_schema(): void {

foreach ( self::SCHEMA as $table => $fields ) {

self::table_delta( $table, $fields );

}

}

//...

}

A brief aside: Smolblog uses UUIDs for its unique identifiers, and they’re stored here as full strings in fields ending with _uuid. I ran into trouble storing them as bytes, and something in WordPress would frequently mess with my queries when I had fields named things like user_id and site_id. I’m noting this here in case you run into the same things I did.

When WordPress loads the plugin, it will call the update_schema function declared here. That function loops through the array, extracts the table name and fields, and passes them to this function:

public static function table_delta( string $table, string $fields ): void {

global $wpdb;

$table_name = $wpdb->base_prefix . 'sb_' . $table;

$charset_collate = $wpdb->get_charset_collate();

$sql = "CREATE TABLE $table_name (

id bigint(20) NOT NULL AUTO_INCREMENT,

$fields

PRIMARY KEY (id)

) $charset_collate;";

if ( md5( $sql ) === get_option( $table . '_schemaver', '' ) ) {

return;

}

require_once ABSPATH . 'wp-admin/includes/upgrade.php';

dbDelta( $sql );

update_option( $table . '_schemaver', md5( $sql ) );

}

This function takes care of the boilerplate we talked about earlier and runs the steps:

- It creates the table name using the same pattern as before: the base prefix plus

sb_.

- It creates a

CREATE TABLE SQL statement using the table name and fields. (It’s okay to build a SQL query this way because all of the data is coming from constants inside the PHP file; none of it is coming from form data or other untrusted sources.)

- It takes the MD5 hash of the SQL statement and compares that to the saved option for this table. The hash will change when the code changes, so this is a quick way to keep our code and database in-sync.

- If the database needs to be updated, it requires the correct file from WordPress Core and runs the

dbDelta function.

- Finally, it saves the MD5 hash to the blog options so we know what version the database is on.

By calculating the version using the hash of the actual SQL, we don’t have to worry about whether some other version number has been updated. This may or may not be the approach you want to take in a production application, but it has proven very useful in development. This is the same idea as using the filemtime function as the “version number” of static CSS and JavaScript in your theme.

So there we have it. We’ve used the connection information in WordPress to hook up a Laravel database connection. And at some point in the future, it’ll be that much easier to let Smolblog work with SQLite which will in turn let Smolblog work on even more web hosts. And you can use this to do whatever you want! Maybe you just wanted to transfer some skills from Laravel to WordPress. Maybe you’re just in it for the academic challenge.

One thing you can do with this is unit-test your services using an in-memory SQLite database… and I’ll leave you with that.

final class DatabaseServiceUnitTest extends \PHPUnit\Framework\TestCase {

private \Illuminate\Database\Connection $db;

private DatabaseService $subject;

protected function setUp(): void {

$manager = new \Illuminate\Database\Capsule\Manager();

$manager->addConnection([

'driver' => 'sqlite',

'database' => ':memory:',

'prefix' => '',

]);

$manager->getConnection()->getSchemaBuilder()->create(

'content_events',

function(\Illuminate\Database\Schema\Blueprint $table) {

$table->uuid('event_uuid')->primary();

$table->dateTimeTz('event_time');

$table->text('payload');

}

);

$this->db = $manager->getConnection();

$this->subject = new DatabaseService(db: $this->db);

}

public function testItPersistsAContentEvent() {

$event = new class() extends ContentEvent {

public function __construct() {

parent::__construct(

id: Identifier::fromString('8289a96d-e8c7-4c6a-8d6e-143436c59ec2'),

timestamp: new \DateTimeImmutable('2022-02-22 02:02:02+00:00'),

);

}

public function getPayload(): array {

return ['one' => 'two', 'three' => 'four'];

}

};

$this->subject->onContentEvent($event);

$expected = [

'event_uuid' => '8289a96d-e8c7-4c6a-8d6e-143436c59ec2',

'event_time' => '2022-02-22T02:02:02.000+00:00',

'payload' => '{"one":"two","three":"four"}',

];

$this->assertEquals((object)$expected, $this->db->table('content_events')->first());

$this->assertEquals(1, $this->db->table('content_events')->count());

}

}

and @madcrasher saying ‘Logging in so I don’t get deleted. Link in bio.’](https://cdn.uploads.micro.blog/644/2023/259e414ba4.jpg)